Apache Spark Interview Questions and Answers



This blog of top Apache Spark interview questions and answers covers the Spark concepts you need to clear a Spark job interview. Do you want to get placed in a company with your Apache Spark knowledge and skills? To get the job, you will have to face the interviewer and answer a lot of questions. That is why we have collected the most frequently asked Apache Spark interview questions and answers to help you prepare well for the interview.

Apache Spark interview questions are drawn from core concepts such as Spark architecture, YARN, Spark components, RDDs, built-in functions, Hadoop, MapReduce, lazy evaluation, and libraries. This blog covers interview questions and answers on all of these concepts. To brush up on Hadoop and Apache Spark concepts, you can also take CourseJet's Apache Spark Training. Let's dive into the Apache Spark interview questions to build your career.

Top Apache Spark Interview Questions and Answers

Apache Spark is one of the most prominent unified analytics frameworks designed for big data processing. It has built-in modules for large-scale SQL, stream processing, machine learning, and graph processing, and it handles both batch and streaming workloads. Spark provides high-level APIs in Scala, Java, Python, and R. Apache Spark can distribute large-scale data processing tasks across multiple computers, either on its own or together with other distributed computing tools.

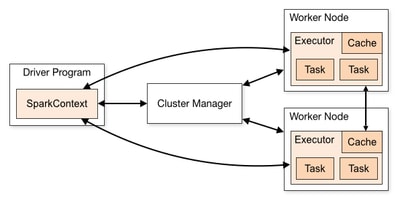

The image below gives a quick overview of the Spark architecture.

Spark is one of the most popular data processing frameworks. It follows a master-slave architecture: a Spark cluster consists of a single master and multiple slave (worker) nodes. The Spark architecture is built on two abstractions: the Resilient Distributed Dataset (RDD) and the Directed Acyclic Graph (DAG). Spark applications run as independent sets of processes on a cluster. An RDD holds data that is split into partitions and distributed among different nodes, and it can restore lost partitions after a failure by recomputing them from its lineage. The DAG describes the sequence of computations to perform on the data; Spark divides it into stages and tasks that run on the cluster. The image above gives a detailed view of the process flow in the Apache Spark architecture.

Spark is widely used by many organizations and vendors for large-scale data processing.

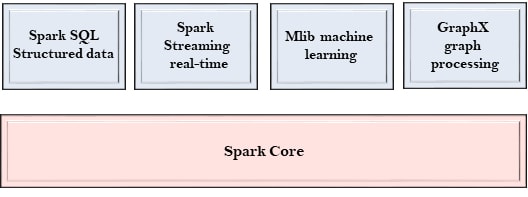

The key components of Spark are as follows:

- Spark SQL

- Spark Streaming

- MLlib

- GraphX

- Spark Core

Spark SQL is the Spark module for structured data processing. Spark SQL interfaces give Spark more information about the structure of both the data and the computation being performed, and Spark SQL uses this extra information to perform extra optimizations. Spark SQL is mainly used to execute SQL queries, and it can also read data from an existing Hive installation. Spark SQL supports both JDBC and ODBC connections. Moreover, it supports various data sources such as JSON, Parquet, and Hive tables.

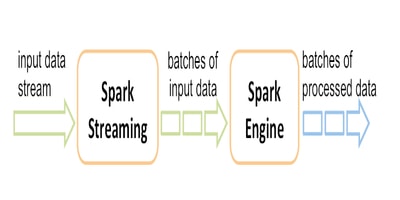

Spark Streaming is a core component of Apache Spark and an extension of the core Spark API. Its main function is to enable scalable, high-throughput, fault-tolerant processing of data streams. Spark Streaming represents a continuous stream of data with a high-level abstraction called a DStream, and it lets you reuse the same code for batch processing.

Apache Spark ships with two main libraries for advanced analytics: MLlib and GraphX. MLlib is Spark's scalable machine learning library. It includes common learning algorithms and utilities such as classification, regression, clustering, collaborative filtering, and dimensionality reduction. It also contains underlying optimization primitives, statistics, feature extraction and transformation utilities, and data types.

The GraphX library in Apache Spark is used to manipulate graphs and perform graph-parallel computations. It includes a growing collection of graph algorithms. GraphX extends the Spark RDD with a Resilient Distributed Property Graph: a directed multigraph with properties attached to each vertex and edge.

The comparison between Apache Spark and Hadoop is as follows:

| Apache Spark | Hadoop |
|---|---|
| One of the most prominent unified analytics frameworks designed for big data processing | A popular open-source collection of software utilities that provides a framework for distributed storage and processing of big data |
| Written mainly in Scala, with parts in Java, Python, and R | Written in Java |
| Concise, high-level APIs make code compact and easier to write | MapReduce code tends to be lengthy and complex |
| Up to 100x faster than MapReduce for some in-memory workloads, because it keeps intermediate data in memory | MapReduce writes intermediate results to disk, which makes it slower, although it is still faster than traditional systems at big data scale |
| Open source | Open source |
| Supports real-time (stream), batch, graph, and other kinds of processing | Supports batch processing |

Apache Spark is a popular unified analytics framework for processing large-scale data workloads. It uses in-memory caching for faster queries. The Spark framework is also used to build data pipelines, run distributed SQL, run machine learning algorithms, and much more.

Apache Spark is a free and open-source framework designed for big data processing. It can distribute large-scale data processing tasks across multiple computers, either on its own or together with other distributed computing tools, which makes it a fast way to develop big data processing applications. Note that Apache Spark is not a web application framework: the similarly named "Spark Framework" (Spark Java) is a separate, unrelated micro-framework for building web applications.

The SparkContext parallelize method is used to create a parallelized collection from an existing collection in the driver program. Parallelizing lets Apache Spark distribute the data among different nodes instead of depending on a single node to process it. The method is called as sc.parallelize(), where sc is the SparkContext. Once data is parallelized, tasks on its partitions run concurrently on the worker nodes.
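To make the idea concrete, here is a minimal plain-Python sketch (not Spark code) of how a driver-side list might be split into a fixed number of partitions. The helper name `parallelize_model` is hypothetical, and the sketch assumes an even, contiguous split. In PySpark itself you would call `sc.parallelize(data, numSlices)` and could inspect the resulting partitions with `.glom().collect()`.

```python
def parallelize_model(data, num_slices):
    """Split a local list into num_slices contiguous partitions.

    Hypothetical helper modeling sc.parallelize(); not Spark's implementation.
    """
    if num_slices < 1:
        raise ValueError("num_slices must be at least 1")
    n = len(data)
    partitions = []
    for i in range(num_slices):
        # Partition i covers positions [i*n//k, (i+1)*n//k), so partition
        # sizes differ by at most one element.
        start = i * n // num_slices
        end = (i + 1) * n // num_slices
        partitions.append(data[start:end])
    return partitions


parts = parallelize_model(list(range(10)), 3)
print(parts)  # [[0, 1, 2], [3, 4, 5], [6, 7, 8, 9]]
```

Each inner list would be processed by its own task, which is what allows the work to run on several worker nodes at once.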


A Spark partition is a logical chunk of a large distributed dataset, stored on a node in the cluster. Partitions let Spark distribute data across the nodes of a cluster. As the name suggests, partitioning divides the data into smaller parts. The partition is the basic unit of parallelism in Apache Spark: each task processes one partition. Partitioning also helps reduce the memory requirements for each node.
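Key-value data is often partitioned by hashing the key, so that all records with the same key land in the same partition. The sketch below models that idea in plain Python. `hash_partition` is a hypothetical helper; Spark's own `HashPartitioner` (on the JVM) uses the key's `hashCode` rather than Python's `hash`.

```python
def hash_partition(pairs, num_partitions):
    """Assign (key, value) pairs to partitions by hashing the key.

    Plain-Python model of hash partitioning: partition = hash(key) mod N.
    """
    partitions = [[] for _ in range(num_partitions)]
    for key, value in pairs:
        # Python's % with a positive divisor is never negative, so every
        # key maps to a valid partition index.
        partitions[hash(key) % num_partitions].append((key, value))
    return partitions


# Small non-negative int keys hash to themselves in CPython, which keeps this
# example deterministic (str hashes are randomized per process).
parts = hash_partition([(k, k * k) for k in range(6)], 3)
print(parts)  # [[(0, 0), (3, 9)], [(1, 1), (4, 16)], [(2, 4), (5, 25)]]
```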

An action is one of the two kinds of RDD operations. Actions are used to obtain the actual values computed from an RDD: an action runs the computation on the dataset and returns the result to the driver program. The RDDs produced by transformation operations serve as the input to actions.

Spark Core is the central unit of functionality in Apache Spark and the base for any kind of project you build on it. It acts as the distributed execution engine and provides functionality such as memory management, task dispatching, task scheduling, fault tolerance, and in-memory computation.

Apache Spark RDD (Resilient Distributed Dataset) is the fundamental data structure of Spark, and Apache Spark revolves around RDDs. An RDD is an immutable collection of elements or records partitioned across the nodes of a cluster, which makes it easier to execute operations in parallel. There are two ways to create an RDD: parallelizing an existing collection in the driver program, or referencing a dataset in an external storage system. Spark uses RDDs to perform MapReduce-style operations faster and more efficiently. RDDs support in-memory computation, and sharing data in memory is faster than sharing it over the network or through disk.
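The following plain-Python sketch models two RDD properties described above: immutability (a transformation returns a new dataset) and recovery from failure by recomputing a lost partition from its lineage. `ToyRDD` is a hypothetical class for illustration only; real RDDs track lineage through dependencies on parent RDDs and are far more involved.

```python
class ToyRDD:
    """Immutable, partitioned dataset that remembers how it was derived.

    Hypothetical plain-Python model of RDD lineage, not Spark's class.
    """

    def __init__(self, source=None, parent=None, func=None):
        self._source = source  # list of partitions, only for a base dataset
        self._parent = parent  # lineage: the dataset this one was derived from
        self._func = func      # per-partition function applied to the parent

    def map(self, f):
        # Returns a NEW dataset; self is never modified (immutability).
        return ToyRDD(parent=self, func=lambda part: [f(x) for x in part])

    def compute(self, i):
        """Compute partition i by replaying the lineage back to the source."""
        if self._parent is None:
            return list(self._source[i])
        return self._func(self._parent.compute(i))


base = ToyRDD(source=[[1, 2], [3, 4]])
squared = base.map(lambda x: x * x)

cache = {i: squared.compute(i) for i in range(2)}
del cache[1]                   # simulate losing the node that held partition 1
cache[1] = squared.compute(1)  # rebuild only the lost partition from lineage
print(cache)  # {0: [1, 4], 1: [9, 16]}
```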

There are two main operations provided by RDD. They are:

- Transformation

- Action

A transformation creates a new dataset from an existing one. Transformations are lazy: Spark computes them only when an action requires a result to be returned to the driver program. The frequently used RDD transformations are as follows:

- mapPartitions(func)

- union(otherDataset)

- map(func)

- flatMap(func)
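The semantics of these transformations can be modeled on a dataset represented as a list of partitions (a list of lists). The `rdd_*` helpers below are hypothetical plain-Python stand-ins, not PySpark calls, and they run eagerly, so they show only what each transformation computes, not when Spark computes it. In PySpark the corresponding methods are `rdd.map`, `rdd.flatMap`, `rdd.mapPartitions`, and `rdd.union`.

```python
def rdd_map(parts, f):
    # map: exactly one output element per input element
    return [[f(x) for x in p] for p in parts]


def rdd_flat_map(parts, f):
    # flatMap: each input element can produce zero or more output elements
    return [[y for x in p for y in f(x)] for p in parts]


def rdd_map_partitions(parts, f):
    # mapPartitions: f receives an iterator over a whole partition at once,
    # which is useful for per-partition setup such as opening a connection
    return [list(f(iter(p))) for p in parts]


def rdd_union(parts_a, parts_b):
    # union: keeps duplicates; here the result simply holds both inputs' partitions
    return parts_a + parts_b


lines = [["a b", "c"], ["d e f"]]        # two partitions of text lines
words = rdd_flat_map(lines, str.split)    # [['a', 'b', 'c'], ['d', 'e', 'f']]
upper = rdd_map(words, str.upper)         # [['A', 'B', 'C'], ['D', 'E', 'F']]
sizes = rdd_map_partitions(words, lambda it: [sum(1 for _ in it)])  # [[3], [3]]
combined = rdd_union(words, [["a"]])      # three partitions
```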

An action is the other core RDD operation. It runs the computation on the dataset, using the RDDs produced by transformations as its input, and returns the result to the driver program. The frequently used RDD actions are listed below:

- count()

- reduce(func)

- take(n)

- first()

- takeOrdered(n, [ordering])

- saveAsSequenceFile(path) (Java and Scala)

- collect()
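As with transformations, the behavior of common actions can be sketched in plain Python over a list of partitions. These `rdd_*` helpers are hypothetical, not PySpark's API; the point they illustrate is that an action produces a concrete value for the driver, often by computing partial results per partition first. `saveAsSequenceFile` is left out because it writes to storage instead of returning a value.

```python
import heapq
import operator
from functools import reduce
from itertools import chain, islice


def rdd_collect(parts):
    # collect: bring every element back to the driver as one list
    return list(chain.from_iterable(parts))


def rdd_count(parts):
    # count: each partition counts locally and the driver sums the counts
    return sum(len(p) for p in parts)


def rdd_reduce(parts, f):
    # reduce: reduce inside each non-empty partition, then merge the partial
    # results on the driver, so f should be associative and commutative
    partials = [reduce(f, p) for p in parts if p]
    if not partials:
        raise ValueError("cannot reduce an empty dataset")
    return reduce(f, partials)


def rdd_take(parts, n):
    # take: scan partitions in order until n elements have been found
    return list(islice(chain.from_iterable(parts), n))


def rdd_first(parts):
    taken = rdd_take(parts, 1)
    if not taken:
        raise ValueError("dataset is empty")
    return taken[0]


def rdd_take_ordered(parts, n, key=None):
    # takeOrdered: the n smallest elements, optionally compared by key
    return heapq.nsmallest(n, chain.from_iterable(parts), key=key)


parts = [[5, 1], [4], [3, 2]]
print(rdd_collect(parts))               # [5, 1, 4, 3, 2]
print(rdd_count(parts))                 # 5
print(rdd_reduce(parts, operator.add))  # 15
print(rdd_take(parts, 2))               # [5, 1]
print(rdd_first(parts))                 # 5
print(rdd_take_ordered(parts, 3))       # [1, 2, 3]
```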

Spark Driver program plays a key role in launching various parallel operations on the cluster. The driver in the context of Spark is also responsible for converting an application into smaller units called tasks. It is the program used to declare the actions and transformations on RDDs and also submits such requests to the master.
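The driver's role of breaking work into tasks can be sketched in plain Python. In this hypothetical model, `run_job` plays the driver: it creates one task per partition, hands the tasks to a thread pool that stands in for executors, and gathers the returned results. Real Spark ships serialized tasks to executor processes on worker nodes, which this sketch does not attempt to reproduce.

```python
from concurrent.futures import ThreadPoolExecutor


def run_job(partitions, task_fn, num_executors=2):
    """Toy driver: split a job into one task per partition and run them.

    Hypothetical model; a thread pool stands in for the cluster's executors.
    """
    with ThreadPoolExecutor(max_workers=num_executors) as pool:
        # One task per partition, which is how Spark sizes the tasks of a stage.
        futures = [pool.submit(task_fn, part) for part in partitions]
        # The driver gathers the task results in partition order.
        return [future.result() for future in futures]


partial_sums = run_job([[1, 2, 3], [4, 5], [6]], sum)
print(partial_sums)       # [6, 9, 6]
print(sum(partial_sums))  # 21
```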

The built-in functions in Spark are listed below:

- Cartesian Function

- Filter Function

- First Function

- reduceByKey Function

- Co-Group Function

- Intersection Function

- Union Function

- Count Function

- Map Function

- Distinct Function

- Take Function
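`reduceByKey` comes up often in interviews, so it is worth a closer look. The sketch below is a hypothetical plain-Python model of the idea: values for each key are first combined within each partition, then shuffled so that each key ends up in exactly one partition, and finally merged. In PySpark the call is simply `rdd.reduceByKey(func)`.

```python
from collections import defaultdict
from functools import reduce


def reduce_by_key(parts, func, num_partitions=2):
    """Toy reduceByKey over partitions of (key, value) pairs.

    Hypothetical plain-Python model of the combine, shuffle, merge flow.
    """
    # 1) Map-side combine: merge values for the same key inside each partition.
    local_results = []
    for part in parts:
        local = {}
        for key, value in part:
            local[key] = func(local[key], value) if key in local else value
        local_results.append(local)

    # 2) Shuffle: route every key to one output partition by hashing it.
    buckets = [defaultdict(list) for _ in range(num_partitions)]
    for local in local_results:
        for key, value in local.items():
            buckets[hash(key) % num_partitions][key].append(value)

    # 3) Reduce side: merge the partial values that arrived for each key.
    return [{key: reduce(func, values) for key, values in bucket.items()}
            for bucket in buckets]


pairs = [[("spark", 1), ("rdd", 1), ("spark", 1)], [("spark", 1), ("dag", 1)]]
output = reduce_by_key(pairs, lambda a, b: a + b)

# String hashes vary between Python runs, so which output partition holds a
# key can change, but the per-key totals do not.
totals = {}
for part in output:
    totals.update(part)
print(totals == {"spark": 3, "rdd": 1, "dag": 1})  # True
```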

There are three considerations when tuning memory usage in Spark:

- The memory used by the objects

- The overhead of garbage collection

- The cost of accessing those objects

Parquet is a columnar storage format that is supported by many data processing systems. Spark SQL supports both reading and writing Parquet files and automatically preserves the schema of the original data. When reading Parquet files, all columns are automatically converted to nullable for compatibility reasons.

The key features of Spark are as follows:

- Provides high performance for both streaming data processing and batch processing

- Easy to use

- Fast processing speed

- Enhanced support for sophisticated analytics

- Real-time stream data processing

- Flexible enough

- Provides a collection of libraries

- Hadoop Integration

- Supports multiple formats


The languages supported by Apache Spark are Scala, Java, Python, and R. Scala is a popular choice because Spark itself is written mainly in Scala.

YARN stands for Yet Another Resource Negotiator. It is the resource management layer of Apache Hadoop and is often described as a distributed operating system for big data applications. YARN decouples resource management and job scheduling from the data processing component, responsibilities that MapReduce handled itself in earlier Hadoop versions. For Spark, YARN provides a central resource management platform for running scalable operations across the cluster.

Spark executors are processes launched on the worker nodes of a cluster that run the tasks of a Spark application. The SparkContext in the driver sends tasks to the executors to run, and executors also store any data the application caches in memory. Executors are typically launched at the start of a Spark application and run for its entire lifetime.

A sparse vector in Apache Spark saves space by storing only the non-zero entries. It is represented by two parallel arrays: an index array and a value array.

Sparse Vector Constructor and its syntax

SparseVector(int size, int[] indices, double[] values)
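To show what the three constructor arguments mean, here is a hypothetical plain-Python class with the same shape (`size`, `indices`, `values`). It is a sketch for illustration, not the MLlib class; in PySpark the real equivalent can be created with `pyspark.ml.linalg.Vectors.sparse(5, [1, 3], [2.0, 4.0])`.

```python
class ToySparseVector:
    """Sparse vector stored as (size, indices, values).

    Hypothetical plain-Python model mirroring
    SparseVector(int size, int[] indices, double[] values); not the MLlib class.
    """

    def __init__(self, size, indices, values):
        if len(indices) != len(values):
            raise ValueError("indices and values must have the same length")
        if any(i < 0 or i >= size for i in indices):
            raise ValueError("index out of range")
        if list(indices) != sorted(set(indices)):
            raise ValueError("indices must be strictly increasing")
        self.size = size
        self.indices = list(indices)
        self.values = [float(v) for v in values]

    def to_dense(self):
        # Expand to a full list; positions not listed in indices are 0.0.
        dense = [0.0] * self.size
        for i, v in zip(self.indices, self.values):
            dense[i] = v
        return dense

    def dot(self, dense):
        # Only the stored entries contribute, so the cost grows with the
        # number of non-zeros rather than with the vector's size.
        return sum(v * dense[i] for i, v in zip(self.indices, self.values))


v = ToySparseVector(5, [1, 3], [2.0, 4.0])
print(v.to_dense())                      # [0.0, 2.0, 0.0, 4.0, 0.0]
print(v.dot([1.0, 1.0, 1.0, 1.0, 1.0]))  # 6.0
```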

Accumulators are shared variables in Spark that are only "added" to through an associative and commutative operation, so they can be supported efficiently in parallel. They are used to implement counters and sums. Spark natively supports accumulators of numeric types.
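The sketch below is a hypothetical plain-Python model of why accumulators need an associative and commutative operation: each task computes its partial value independently, and the driver may receive those values in any order. Trying every arrival order yields the same total. In PySpark the real API is `sc.accumulator(0)`, which tasks update with `add`.

```python
from itertools import permutations


class ToyAccumulator:
    """Driver-side accumulator that merges values reported by tasks.

    Hypothetical plain-Python model, not PySpark's Accumulator class.
    """

    def __init__(self, zero=0):
        self.value = zero

    def add(self, amount):
        # Addition is associative and commutative, so the final value does not
        # depend on the order in which task updates arrive.
        self.value += amount


def count_blank_lines(partition):
    # Each task counts blank lines in its own partition locally.
    return sum(1 for line in partition if not line.strip())


partitions = [["a", "", "b"], ["", ""], ["c"]]
task_results = [count_blank_lines(p) for p in partitions]

# Try every possible arrival order of the task results.
finals = set()
for order in permutations(task_results):
    acc = ToyAccumulator()
    for result in order:
        acc.add(result)
    finals.add(acc.value)
print(finals)  # {3}
```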

The Spark Streaming component represents a continuous stream of data with a high-level abstraction called a DStream (Discretized Stream). Internally, a DStream is a continuous series of RDDs.

DStream Constructor and its Description

DStream(StreamingContext ssc, scala.reflect.ClassTag<T> evidence$1)
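Conceptually, a DStream applies the same RDD logic to each small batch of data that arrives during a batch interval. The sketch below is a hypothetical plain-Python model in which a stream is just a list of batches; it does not use the Spark Streaming API, where the equivalent would be built from a `StreamingContext` and DStream methods such as `flatMap` and `reduceByKey`.

```python
from collections import Counter


def word_counts_per_batch(batches):
    """Apply the same word-count logic to every micro-batch of a stream.

    Hypothetical model: each batch (a list of lines) stands in for the RDD
    that Spark Streaming would create for one batch interval.
    """
    for batch in batches:
        # The per-batch logic is ordinary batch code, which is why
        # Spark Streaming lets you reuse batch code for streams.
        yield Counter(word for line in batch for word in line.split())


stream = [["spark streaming", "spark"], [], ["dstream of rdds"]]
results = list(word_counts_per_batch(stream))
print(results[0])  # Counter({'spark': 2, 'streaming': 1})
```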

The various data sources available in Spark are listed below:

- Parquet Files

- Generic File Source Options

- Avro Files

- Whole Binary Files

- JSON Files

- ORC Files

- Hive Tables

Spark versions before 2.0 used Akka for scheduling and for messaging between the workers and the master. Since Spark 2.0, Spark uses its own Netty-based RPC layer instead, and Akka is no longer a dependency.

The following are the various MLlib tools in Spark:

- Pipelines

- Utilities

- ML Algorithms

- Persistence (saving and loading algorithms, models, and Pipelines)

- Featurization

The following are the key uses of Apache Spark.

- Data Integration – Spark is used to reduce the time and cost of the Extract, Transform, and Load (ETL) process.

- Real-time Stream Processing

- Spark for Machine Learning

- Fog Computing

- Spark for Interactive Analytics


The most prominent users of Spark are as follows:

- Yahoo

- Conviva

- Uber

- eBay

- Alibaba

The cluster managers available in Spark are as follows:

- Hadoop YARN

- Standalone Mode

- Kubernetes

- Apache Mesos

As the name suggests, lazy evaluation means that Spark evaluates an expression only when an action is triggered. In Spark, lazy evaluation applies to RDD transformations: a transformation is not computed until an action requires a result to be returned to the driver program. Lazy evaluation lets Spark avoid unnecessary CPU and memory usage and plan the whole chain of transformations before running it.
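A small plain-Python model helps show what "lazy" means here. `LazyPipeline` is a hypothetical class: calling `map` or `filter` only records a step, and the work happens when the `collect` action runs. Spark's real scheduler goes further by building a DAG of stages from the recorded lineage.

```python
class LazyPipeline:
    """Records transformations and runs them only when an action is called.

    Hypothetical plain-Python model of lazy evaluation, not Spark's scheduler.
    """

    def __init__(self, data, steps=()):
        self._data = data
        self._steps = tuple(steps)  # recorded plan; nothing has run yet

    def map(self, f):
        # Transformation: return a new pipeline with one more recorded step.
        return LazyPipeline(self._data, self._steps + (("map", f),))

    def filter(self, predicate):
        # Transformation: also just recorded, not executed.
        return LazyPipeline(self._data, self._steps + (("filter", predicate),))

    def collect(self):
        # Action: the recorded steps are applied now. The built-in map/filter
        # return lazy iterators, so each element flows through the whole chain
        # without materializing intermediate lists.
        items = iter(self._data)
        for kind, f in self._steps:
            items = map(f, items) if kind == "map" else filter(f, items)
        return list(items)


calls = []


def square(x):
    calls.append(x)  # record every time the function actually runs
    return x * x


pipeline = LazyPipeline(range(5)).map(square).filter(lambda y: y % 2 == 0)
print(calls)               # [] (defining transformations ran nothing)
print(pipeline.collect())  # [0, 4, 16]
print(calls)               # [0, 1, 2, 3, 4]
```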

The levels of Persistence in Spark are as follows:

- MEMORY_ONLY

- MEMORY_AND_DISK_SER

- DISK_ONLY

- MEMORY_AND_DISK

- MEMORY_ONLY_SER

- OFF_HEAP
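Each persistence level is a combination of a few flags: whether to use disk, memory, or off-heap memory, whether to keep data deserialized, and how many replicas to store. The sketch below records them in a plain-Python `NamedTuple`, with values mirroring the JVM (Scala) `StorageLevel` constants; it is a reference table for illustration, not PySpark's `StorageLevel` class. PySpark's own constants always use the serialized format because Python data is stored serialized. In PySpark you would pass a level as `rdd.persist(StorageLevel.MEMORY_AND_DISK)`.

```python
from typing import NamedTuple


class Level(NamedTuple):
    use_disk: bool
    use_memory: bool
    use_off_heap: bool
    deserialized: bool
    replication: int = 1


# Flags mirroring the JVM StorageLevel constants (hypothetical table).
LEVELS = {
    "MEMORY_ONLY":         Level(False, True,  False, True),
    "MEMORY_AND_DISK_SER": Level(True,  True,  False, False),
    "DISK_ONLY":           Level(True,  False, False, False),
    "MEMORY_AND_DISK":     Level(True,  True,  False, True),
    "MEMORY_ONLY_SER":     Level(False, True,  False, False),
    "OFF_HEAP":            Level(True,  True,  True,  False),
}

# Levels that may keep partitions on local disk.
uses_disk = sorted(name for name, lvl in LEVELS.items() if lvl.use_disk)
# Levels that store partitions as serialized bytes (more compact, more CPU).
serialized = sorted(name for name, lvl in LEVELS.items() if not lvl.deserialized)
print(uses_disk)   # ['DISK_ONLY', 'MEMORY_AND_DISK', 'MEMORY_AND_DISK_SER', 'OFF_HEAP']
print(serialized)  # ['DISK_ONLY', 'MEMORY_AND_DISK_SER', 'MEMORY_ONLY_SER', 'OFF_HEAP']
```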

PageRank is a graph algorithm available in GraphX. It measures the importance of each vertex in a graph. For example, on a social media platform, a user who is followed by many others will be ranked highly. Ranking vertices this way helps surface the most influential users or pages on a specific platform.
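The idea behind PageRank can be sketched with the textbook iterative formulation in plain Python. This is not GraphX's implementation: `pagerank` is a hypothetical helper, ranks here sum to 1, and the damping factor of 0.85 corresponds to a reset probability of 0.15 (GraphX's PageRank takes a reset probability parameter and normalizes ranks differently). An edge `(a, b)` means "a follows b".

```python
def pagerank(edges, num_iters=50, damping=0.85):
    """Textbook iterative PageRank on a directed edge list (ranks sum to 1)."""
    nodes = {n for edge in edges for n in edge}
    out_links = {n: [] for n in nodes}
    for src, dst in edges:
        out_links[src].append(dst)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(num_iters):
        # Every vertex keeps a baseline share from the random "reset" jump.
        new_rank = {v: (1.0 - damping) / n for v in nodes}
        for src in nodes:
            targets = out_links[src]
            if targets:
                # A vertex splits its rank evenly among the vertices it links to.
                share = damping * rank[src] / len(targets)
                for dst in targets:
                    new_rank[dst] += share
            else:
                # Dangling vertex: spread its rank evenly over all vertices.
                for v in nodes:
                    new_rank[v] += damping * rank[src] / n
        rank = new_rank
    return rank


follows = [("alice", "carol"), ("bob", "carol"), ("dave", "carol"), ("carol", "alice")]
ranks = pagerank(follows)
top = max(ranks, key=ranks.get)
print(top)  # carol
```

Carol is followed by three users, so she ends up with the highest rank, and Alice ranks second because the highly ranked Carol follows her.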
