MapReduce Interview Questions and Answers



Are you looking for the top Hadoop MapReduce interview questions and answers? On CourseJet you can find the most frequently asked interview questions and answers, framed by experts. This blog covers questions on concepts such as Apache Hadoop, MapReduce, HDFS, and YARN. Working through them will help you build a solid understanding of Hadoop MapReduce and prepare for the interview. The questions are frequently asked by interviewers and suit both freshers and experienced professionals.

Top MapReduce Interview Questions and Answers

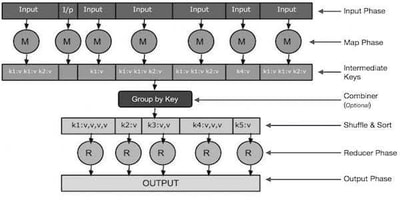

Hadoop MapReduce is a software framework for writing applications that process vast amounts of data in parallel on large clusters. A MapReduce program is composed of a map procedure, which performs filtering and sorting, and a reduce procedure, which performs a summary operation.

Shuffling is the process through which the intermediate output from the mappers is transferred to the reducer.

Apache Hadoop is a collection of open-source software utilities developed by the Apache Software Foundation and written mainly in Java. It provides a framework for distributed storage and for processing big data using the MapReduce programming model, which lets you analyze massive datasets in parallel.

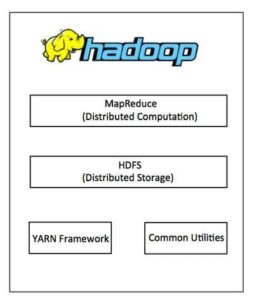

The core components of Hadoop are as follows:

- HDFS

- MapReduce

- YARN Framework

HDFS stands for Hadoop Distributed File System. It is one of the core components of Hadoop. This distributed file system is designed to store large datasets on commodity hardware. It has many similarities with existing distributed file systems, but it is highly fault-tolerant, provides high-throughput access to data, and is designed to be deployed on low-cost hardware.

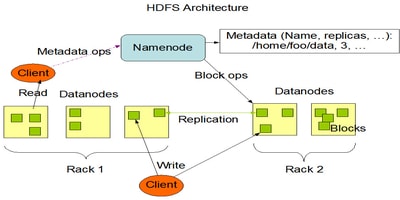

HDFS follows a master-slave architecture. An HDFS cluster consists of a single master server, called the NameNode, which manages the file system namespace and regulates access to files by clients. The cluster also contains a number of DataNodes, usually one per node, which manage the storage attached to the nodes they run on.

The NameNode executes file system namespace operations such as opening, closing, and renaming files. The DataNodes serve read and write requests from clients. HDFS is written in Java, so the NameNode or DataNode software can be deployed on any machine that supports Java. To create a file in HDFS, the client first interacts with the NameNode.

In Hadoop MapReduce, the partitioner controls the partitioning of the keys of the intermediate map outputs. The key, or a subset of the key, is used to derive the partition, typically by a hash function. The key role of the partitioner is to ensure that all the values of a single key reach the same reducer. It also determines how the map output is distributed over the reducers.
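The idea of hash partitioning can be illustrated with a small plain-Python sketch. This is not the Hadoop API: the function name `partition` and the use of `zlib.crc32` as the hash are assumptions made for illustration. Hadoop's default hash partitioner does the equivalent with the key's Java hash code, made non-negative and taken modulo the number of reduce tasks.

```python
import zlib


def partition(key: str, num_reducers: int) -> int:
    """Map a key to a reducer index in the range [0, num_reducers)."""
    # zlib.crc32 stands in for the key's hash code (an assumption for this
    # sketch); it is stable across runs, unlike Python's built-in hash()
    # for strings.
    return zlib.crc32(key.encode("utf-8")) % num_reducers


# Hypothetical intermediate map output.
pairs = [("apple", 1), ("banana", 1), ("apple", 1), ("cherry", 1), ("banana", 1)]
num_reducers = 3

# Route every pair to the bucket of the reducer chosen by the partitioner.
buckets = {r: [] for r in range(num_reducers)}
for key, value in pairs:
    buckets[partition(key, num_reducers)].append((key, value))
```

Because the partition depends only on the key, both `("apple", 1)` pairs land in the same bucket, which is what guarantees that one reducer sees every value for a given key.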

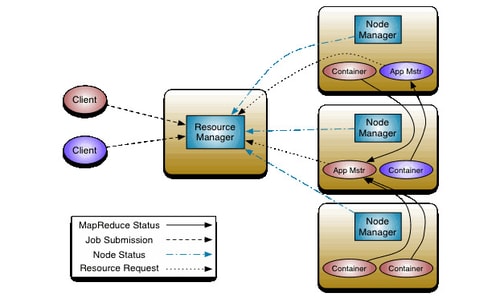

YARN stands for Yet Another Resource Negotiator. It is an essential component of Apache Hadoop. The main aim of Hadoop YARN is to split the functionalities of resource management and job scheduling/monitoring into separate daemons. The accompanying image gives a high-level view of the YARN architecture.

The ResourceManager has the ultimate authority to arbitrate resources among all the applications in the system. The NodeManager is the per-machine agent responsible for containers: it monitors their resource usage (CPU, memory, disk, network) and reports it to the ResourceManager. YARN supports resource reservation via a component called the ReservationSystem.

The significant differences between MapReduce and Spark are as follows:

| Hadoop MapReduce | Spark |
| --- | --- |
| A software framework for parallel processing of vast amounts of data on large clusters. | An open-source cluster computing framework, used as an engine for big data processing and machine learning. |
| Written in Java | Written in Scala |
| Batch processing | Batch, real-time (streaming), interactive, and iterative processing |
| Programs tend to be lengthy and complex | Programs tend to be compact and easier to write |
| Generally lower cost | Generally more expensive, since in-memory processing needs more RAM |
| No built-in libraries; separate tools are used | Ships with libraries such as MLlib and GraphX |

A combiner in MapReduce is also known as a semi-reducer or mini-reducer. Its key function is to improve the efficiency of a MapReduce program by aggregating the map outputs that share the same key on the map side, which reduces the amount of data transferred to the reducers. It takes its input from the Map class and passes its output key-value pairs on to the Reducer class. The combiner is an optional class: a job produces the same result without it, only less efficiently.
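A minimal plain-Python sketch of what a combiner does for a word-count style job follows. It is an illustration only, not the Hadoop API; the function name `combine` and the sample data are assumptions. Note that pre-aggregating like this is only safe when the reduce operation is commutative and associative, as summation is.

```python
from collections import defaultdict


def combine(map_output):
    """Sum the values of each key within a single mapper's output."""
    totals = defaultdict(int)
    for key, value in map_output:
        totals[key] += value
    # Sorting only makes the result deterministic for this illustration.
    return sorted(totals.items())


# Hypothetical output of one mapper before it is sent over the network.
mapper_output = [("the", 1), ("cat", 1), ("the", 1), ("the", 1)]
combined = combine(mapper_output)
```

Here four intermediate pairs shrink to two, `("cat", 1)` and `("the", 3)`, so less data has to be shuffled to the reducers.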


The Hadoop MapReduce framework is used for distributed computing. It lets you write applications that process vast amounts of data. A MapReduce program is composed of a map process and a reduce process. The map process performs filtering and sorting, and the reduce process performs a summary operation.

MapReduce is a key component of Apache Hadoop. A MapReduce job splits the input dataset into independent chunks, which the map tasks process in parallel. The framework then sorts the outputs of the maps, which become the input to the reduce tasks. MapReduce is used for fast data processing in distributed applications.

The Hadoop MapReduce paradigm is a programming paradigm designed for parallel, distributed processing of large data sets. The map step converts the input into tuples (key-value pairs), and the reduce step combines those tuples into a smaller set of tuples.

The Hadoop Distributed File System follows a master-slave architecture. The master in HDFS is the NameNode. It manages the file system namespace and regulates access to files by clients. The NameNode stores only metadata; the actual data is stored on the DataNodes, following the instructions provided by the NameNode. A single NameNode manages one or more DataNodes.

Apache Sqoop is a widely used tool in the Hadoop ecosystem. Its main goal is to transfer bulk data between Apache Hadoop and external data stores such as relational databases and data warehouses. It imports data from these external stores into Hadoop components such as HBase, Hive, and HDFS, and can also export data back out.

JobConf is the primary interface through which a user describes a MapReduce job to the Hadoop framework. It is typically used to specify the mapper, combiner (if any), partitioner, reducer, input format, and output format. The MapReduce programming model then splits the input dataset into independent chunks, which the map tasks process in parallel.

Speculative execution plays a key role in the Hadoop ecosystem. It takes place only when a task runs slower than expected. In that case, the master node launches a duplicate instance of the task on another node. The output of whichever instance finishes first is accepted, and the other instance is killed. Speculative execution is mainly used to prevent a single slow task from delaying the whole job.

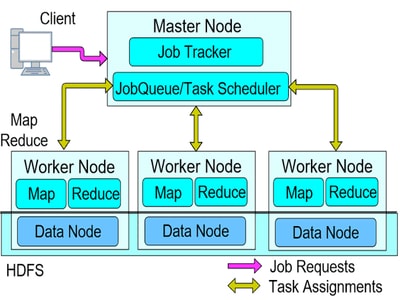

The JobTracker is a key service in Hadoop. It farms out MapReduce tasks to specific nodes in the cluster, and it determines the location of the data by talking to the NameNode. The disadvantage of the JobTracker is that it is a single point of failure for Hadoop MapReduce: if it goes down, all running jobs are halted.

The comparison between RDBMS and Hadoop is as follows:

| RDBMS | Hadoop |
| --- | --- |
| A Relational Database Management System arranges data in tables. | Apache Hadoop is a collection of open-source software utilities for distributed storage and processing. |
| Structured data types | Unstructured and multiple data types |
| Integrity is very high | Integrity is very low |
| Reads are fast | Writes are fast |
| Processing capacity is limited | Processing is coupled with the data |
| Scaling is non-linear | Scaling is linear |

The main aim of the Mapper (or Map) in Hadoop is to process the input records and emit intermediate key-value pairs. It is also where filtering is performed. The key function of the Reducer is to process the output of the Mapper, grouped by key, as its input. The output generated by the reducer is the final output, and it is stored in HDFS.
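The division of labor between the two can be sketched in plain Python with the classic word-count example. This only illustrates the idea and is not the Hadoop API; the function names `map_words` and `reduce_counts` are assumptions.

```python
def map_words(offset, line):
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    # The offset key is unused here, as is common in word count.
    for word in line.split():
        yield (word.lower(), 1)


def reduce_counts(word, counts):
    """Reducer: collapse all the counts for one word into a single total."""
    return (word, sum(counts))


# One input record in, several intermediate pairs out.
intermediate = list(map_words(0, "Hello hello world"))

# All values for one key in, one final pair out.
total = reduce_counts("hello", [1, 1])
```

The mapper sees one record at a time and knows nothing about other records, while the reducer sees every value for a single key, which is why the framework has to group the intermediate pairs by key in between.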


WebDAV stands for Web Distributed Authoring and Versioning. It is a set of extensions to the Hypertext Transfer Protocol (HTTP) that supports authoring web content: it gives users a framework for creating, changing, and moving documents on a server. By exposing HDFS over WebDAV, you can access HDFS as a standard filesystem. On some operating systems, WebDAV shares can be mounted as a filesystem, which gives access to HDFS in the same way.

In the Hadoop Ecosystem, InputSplit is used to represent the data to be processed by the Mapper. It presents the byte-oriented view on the input data.
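As a rough illustration of the byte-oriented view, the sketch below cuts a file of a given length into contiguous byte ranges, one per mapper. It is a simplification in plain Python under stated assumptions: the function name `compute_splits` and the sizes are hypothetical, and the real split calculation also takes block size and configured minimum and maximum split sizes into account.

```python
def compute_splits(file_length, split_size):
    """Return (start, length) byte ranges that together cover the file."""
    if split_size <= 0:
        raise ValueError("split_size must be positive")
    splits = []
    start = 0
    while start < file_length:
        # The last split may be shorter than split_size.
        length = min(split_size, file_length - start)
        splits.append((start, length))
        start += length
    return splits


# A hypothetical 300-byte file cut into splits of at most 128 bytes.
splits = compute_splits(file_length=300, split_size=128)
```

Each `(start, length)` pair describes the bytes handed to one mapper; turning those bytes into records is a separate step.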

In Hadoop, the TaskTracker is a node in the cluster. The key role of the TaskTracker is to accept tasks from the JobTracker in Hadoop MapReduce. It is configured with a set of slots that indicate how many tasks it can accept. It also sends periodic heartbeat messages to the JobTracker to confirm that the TaskTracker is still alive.

Sorting orders the map outputs by key before they are passed to the reducers as input. This lets a reducer easily tell where the values of one key end and the next key begins, so it can generate the final output.

A MapReduce program is executed in three phases, in the following order:

- Map Stage

- Shuffle Stage

- Reduce Stage
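The three stages can be sketched end to end in a few lines of plain Python. This is a single-process illustration of the data flow, not how Hadoop runs a job; the driver `run_job`, the maximum-temperature example, and the record format are all assumptions made for the sketch.

```python
from collections import defaultdict


def run_job(records, mapper, reducer):
    """Run the map, shuffle, and reduce stages in one process."""
    # Map stage: every record yields zero or more (key, value) pairs.
    intermediate = []
    for record in records:
        intermediate.extend(mapper(record))

    # Shuffle stage: bring all values of the same key together.
    grouped = defaultdict(list)
    for key, value in intermediate:
        grouped[key].append(value)

    # Reduce stage: one call per key, with keys visited in sorted order.
    return [reducer(key, grouped[key]) for key in sorted(grouped)]


def max_temp_mapper(record):
    """Parse a hypothetical 'year,temperature' record."""
    year, temp = record.split(",")
    yield (year, int(temp))


def max_temp_reducer(year, temps):
    """Keep the highest temperature seen for the year."""
    return (year, max(temps))


readings = ["1950,22", "1949,31", "1950,35", "1949,18"]
result = run_job(readings, max_temp_mapper, max_temp_reducer)
```

On a real cluster the map and reduce stages run as many parallel tasks on different nodes, and the shuffle moves data between them over the network.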

Mapper is the base class for map tasks in Hadoop MapReduce. Map tasks are implemented by extending the Mapper class.

Mapper Class Syntax:

public class Mapper<KEYIN, VALUEIN, KEYOUT, VALUEOUT>

InputFormat is the first component of Hadoop MapReduce. Its main function is to create the input splits and to divide them into records. The InputFormat also provides the RecordReader, which is responsible for reading records from the input files, and it validates the input specification of the MapReduce job.
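To make the record-reading step concrete, here is a plain-Python sketch of how a line-oriented reader, in the style of TextInputFormat, might turn raw bytes into records whose key is the byte offset of the line and whose value is the line text. The function name `read_records` is an assumption, and the sketch ignores lines that straddle a split boundary.

```python
def read_records(data: bytes):
    """Yield (byte_offset, line_text) records from raw input bytes."""
    offset = 0
    for raw_line in data.splitlines(keepends=True):
        # The value is the line without its terminator; the key is where
        # the line starts in the input.
        yield (offset, raw_line.rstrip(b"\r\n").decode("utf-8"))
        offset += len(raw_line)


records = list(read_records(b"first line\nsecond\nthird"))
```

Each `(offset, line)` pair is what a mapper would receive as its input key and value for a text file.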

The common input formats defined in Apache Hadoop are as follows:

- SequenceFileInputFormat

- KeyValueTextInputFormat

- TextInputFormat

SequenceFileInputFormat is used in Hadoop MapReduce to read sequence files, which are binary files of key-value pairs.

Representation

- public class SequenceFileInputFormat<K,V>

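The type parameters of a Mapper are the input key and value types followed by the output key and value types. For a typical word-count job they are:

- LongWritable and Text (input key and value)

- Text and IntWritable (output key and value)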

The advantages of Hadoop MapReduce Programming are:

- Hadoop is highly scalable

- Hadoop MapReduce is flexible enough for organizations to process a vast amount of data.

- MapReduce programming provides a cost-effective solution for businesses.

- Fast and easy to use

- Proper Security measures and Authentication

- Distributed Parallel Processing

- MapReduce offers a simple programming model.

MapReduce is used across many different fields, including:

- Log Analytics

- Fraud Detection

- Data Analysis

- Social Networks

- E-commerce
